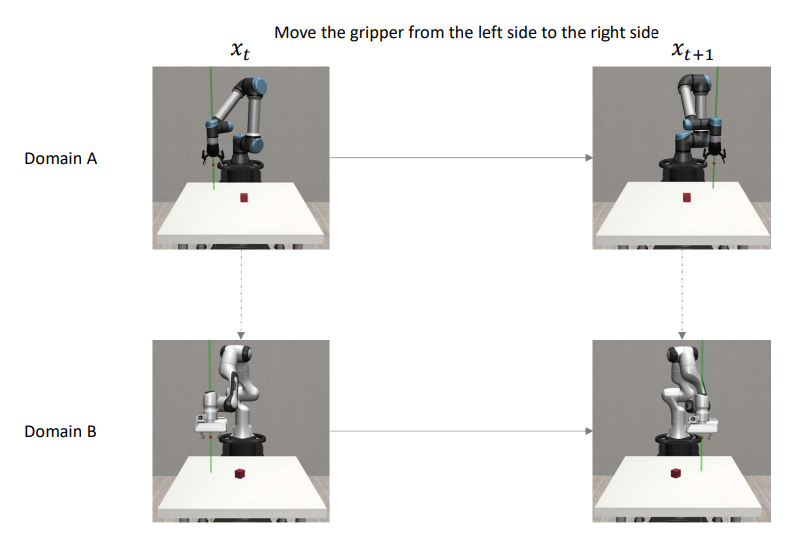

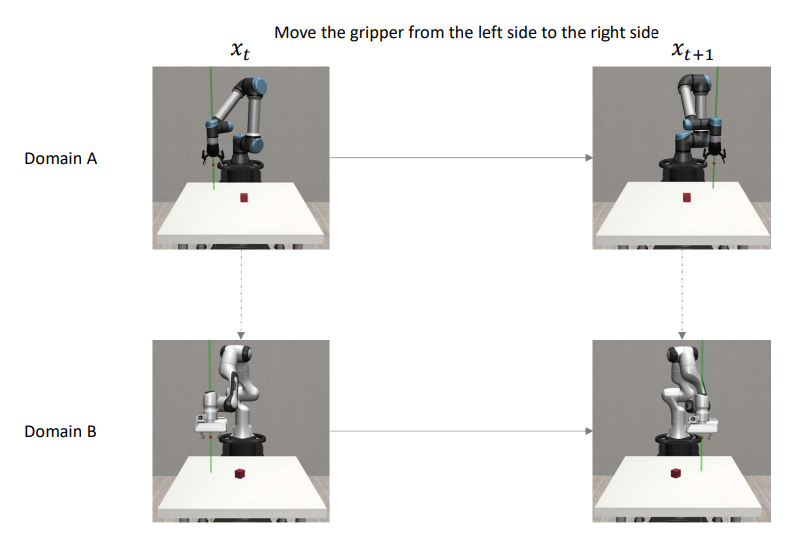

Motivation

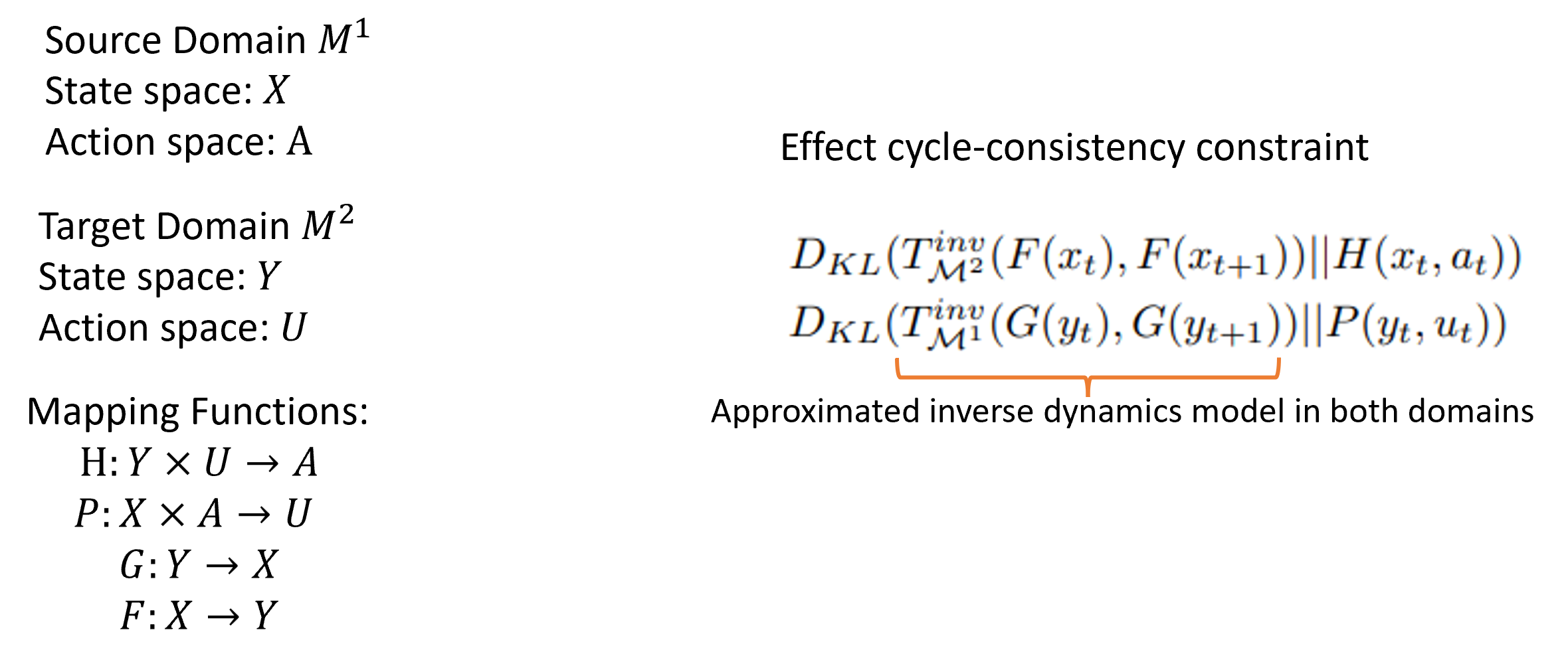

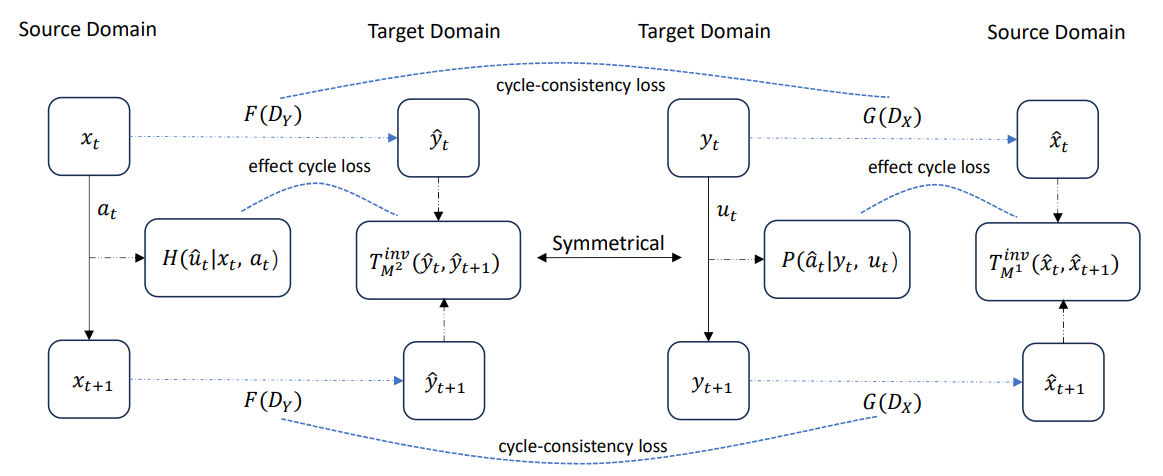

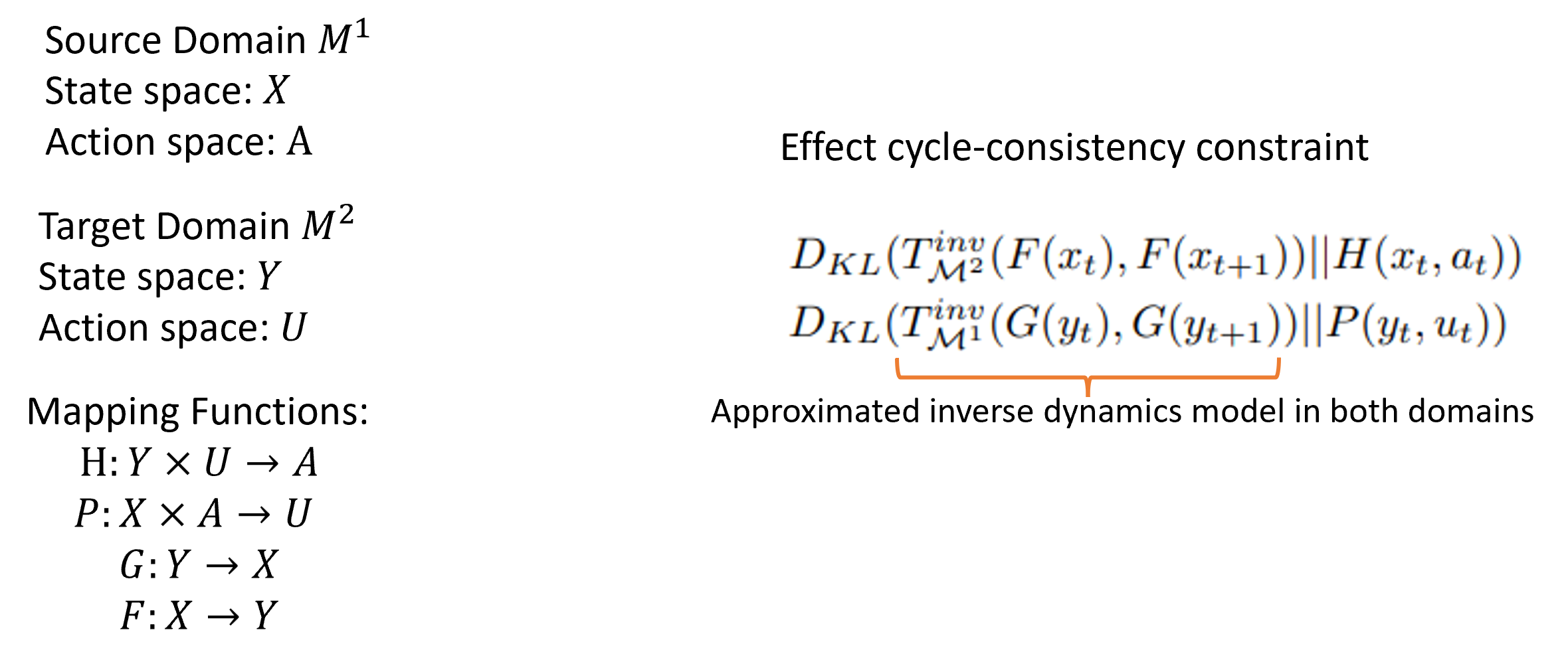

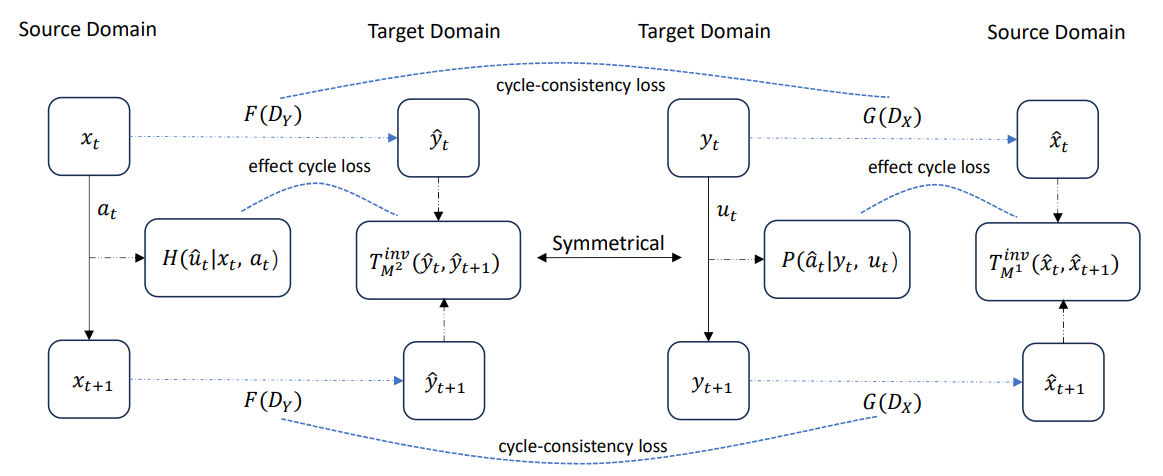

Method Overview

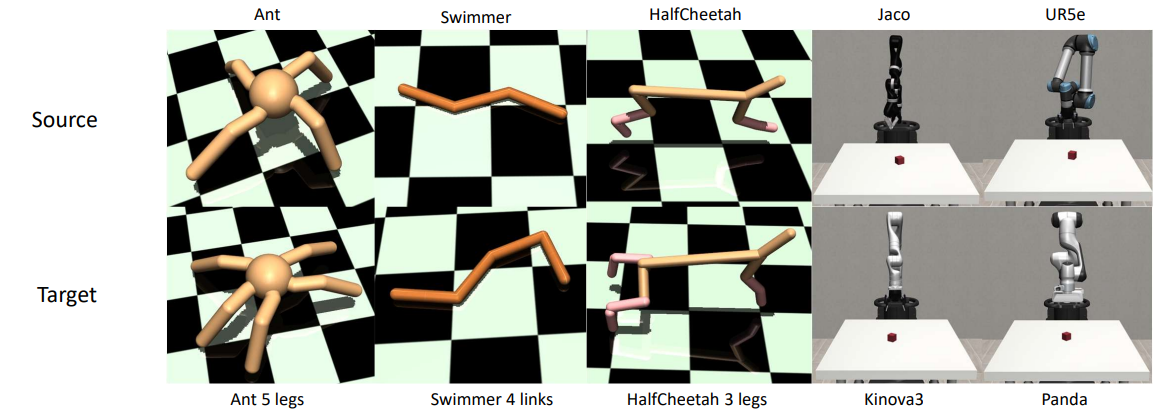

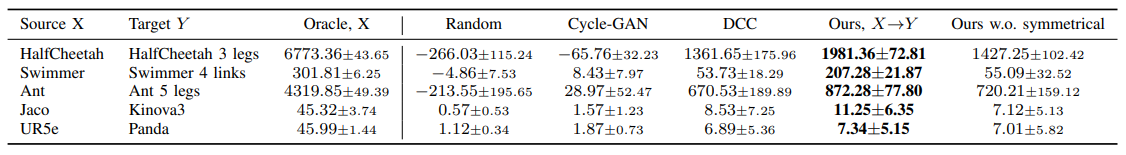

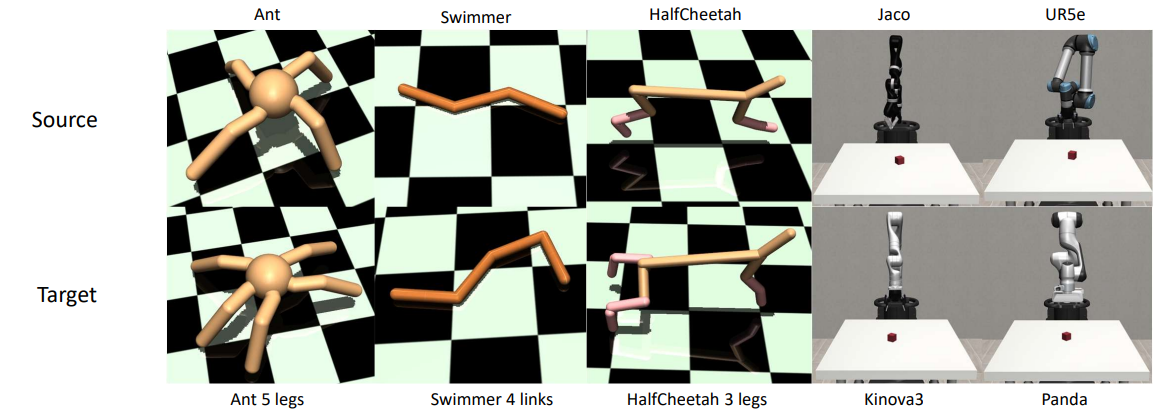

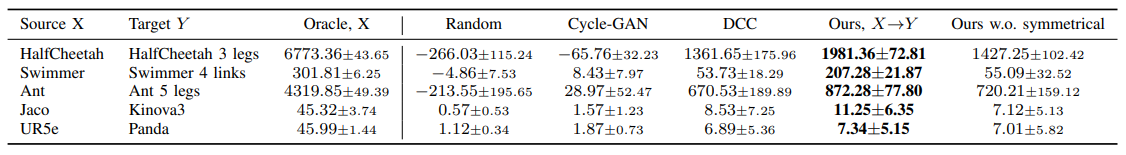

Experiment Results

International Conference on Robotics and Automation (ICRA), 2024

Training a robotic policy from scratch with deep reinforcement learning can be prohibitively expensive because of sample inefficiency. To address this challenge, transferring policies trained in a source domain to a target domain is an attractive paradigm. Previous research has typically focused on domains with similar state and action spaces that differ in other aspects. In this paper, we focus on domains with different state and action spaces, a setting with broader practical implications, e.g., transferring a policy from robot A to robot B. Unlike prior methods that rely on paired data, we propose a novel approach for learning the mapping functions between state and action spaces across domains using unpaired data. To learn these mapping functions, we introduce effect cycle-consistency, which aligns the effects of transitions across the two domains through a symmetrical optimization structure. Once the mapping functions are learned, the policy can be transferred seamlessly from the source domain to the target domain. We evaluate our approach on three locomotion tasks and two robotic manipulation tasks. The empirical results demonstrate that our method significantly reduces alignment errors and achieves better performance than the state-of-the-art method.
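The abstract does not specify the form of the mapping functions or of the effect cycle-consistency objective. The sketch below therefore only illustrates two generic ideas, under stated assumptions:

1. **Policy composition.** A transferred target-domain policy is built by composing three pieces: a target-to-source state map, the source policy, and a source-to-target action map.
2. **Cycle error.** A plain reconstruction-style cycle-consistency error is computed between two state maps.

This reconstruction error is *not* the paper's effect cycle-consistency loss, which aligns the effects of transitions rather than reconstructing states. Every function name and toy map here is hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]
StateMap = Callable[[Vector], Vector]


def compose_transferred_policy(
    source_policy: Callable[[Vector], Vector],
    state_map_target_to_source: StateMap,
    action_map_source_to_target: Callable[[Vector], Vector],
) -> Callable[[Vector], Vector]:
    """Hypothetical helper: run a source-domain policy inside the target domain."""

    def target_policy(s_target: Vector) -> Vector:
        s_source = state_map_target_to_source(s_target)  # translate B's observation into A's state space
        a_source = source_policy(s_source)               # act as robot A would
        return action_map_source_to_target(a_source)     # translate A's action into B's action space

    return target_policy


def cycle_reconstruction_error(forward: StateMap, backward: StateMap, states: Sequence[Vector]) -> float:
    """Mean squared error of backward(forward(s)) against s, averaged over all coordinates.

    Generic CycleGAN-style reconstruction term; NOT the paper's effect cycle-consistency loss.
    """
    total, count = 0.0, 0
    for s in states:
        recon = backward(forward(s))
        total += sum((r - x) ** 2 for r, x in zip(recon, s))
        count += len(s)
    return total / count if count else 0.0


# Hypothetical toy domains: robot A has 2-D states and 1-D actions,
# robot B has 3-D states and 2-D actions.
def a_to_b_state(s: Vector) -> Vector:
    return s + [0.0]      # pad A's state with a zero coordinate


def b_to_a_state(s: Vector) -> Vector:
    return s[:2]          # drop B's extra coordinate


def a_to_b_action(a: Vector) -> Vector:
    return [a[0], a[0]]   # drive both of B's actuators with A's single command


def policy_a(s: Vector) -> Vector:
    return [-s[0]]        # simple proportional controller on the first coordinate


policy_b = compose_transferred_policy(policy_a, b_to_a_state, a_to_b_action)
print(policy_b([1.0, 2.0, 5.0]))  # [-1.0, -1.0]
print(cycle_reconstruction_error(a_to_b_state, b_to_a_state, [[1.0, 2.0], [3.0, 4.0]]))  # 0.0
print(cycle_reconstruction_error(b_to_a_state, a_to_b_state, [[1.0, 2.0, 5.0]]))  # 25/3, about 8.33
```

In this toy setup, the A→B→A cycle is exact, while B→A→B loses B's third coordinate. This is a reminder that checking only one direction can hide information loss when state spaces differ. The symmetrical optimization structure described in the abstract constrains both directions, though its actual loss differs from this sketch.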

@misc{zhu2024cross,
  title={Cross Domain Policy Transfer with Effect Cycle-Consistency},
  author={Ruiqi Zhu and Tianhong Dai and Oya Celiktutan},
  year={2024},
  eprint={2403.02018},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}